Inside the LLM VAPT Process: How Experts Assess and Secure AI Models

Artificial Intelligence is transforming industries at an unprecedented pace. With the rise of powerful tools like ChatGPT, Claude, and other Large Language Models (LLMs), businesses are embracing AI to improve efficiency, customer service, and innovation. But as the use of LLMs grows, so do the risks. These models can be exploited in ways that traditional systems never faced, making LLM Vulnerability Assessment and Penetration Testing (VAPT) more critical than ever.

At DigiFortex , we specialize in securing AI systems through expert-led VAPT services tailored for LLM environments. In this blog, we’ll walk you through the entire process—how we uncover threats, assess risks using security models like STRIDE and DREAD, and help organizations secure their AI investments.

Why AI Security is a Top Priority in 2025

LLM VAPT is a specialized cybersecurity process designed to test and secure Large Language Models against potential vulnerabilities. Traditional security methods often fail to cover the unique challenges LLMs present. These include prompt injection attacks, data leakage, jailbreaking attempts (where users try to bypass model restrictions), and unauthorized API usage.

Unlike traditional applications, LLMs generate dynamic, context-driven output. This unpredictability can lead to exposure of sensitive information, manipulation of model behaviour, or even exploitation of connected systems and plugins.

What is LLM VAPT?

Deploying AI without proper security is like opening the gates of your digital fortress. Without thorough testing, LLMs can inadvertently reveal internal data, violate compliance norms like ISO 27001:2022 or GDPR, or be manipulated into generating harmful content. Whether you’re a fintech company using an AI chatbot or a healthcare provider processing patient data through LLMs—security cannot be an afterthought.

At DigiFortex, we’ve seen organizations risk millions in compliance penalties, customer trust, and operational disruption due to unsecured AI models. Our LLM VAPT service ensures your AI systems are resilient, trustworthy, and audit-ready.

Step-by-Step: How DigiFortex Performs LLM VAPT

Our LLM VAPT methodology is structured, comprehensive, and tailored to each deployment. We combine manual assessments, automated tools, and advanced threat simulations.

We begin by understanding your LLM’s architecture—whether it’s an open-source model, a cloud-hosted service, or integrated via APIs. Once we define the scope, we move into threat modelling to anticipate all possible attack vectors.

STRIDE Threat Modelling in LLM VAPT

To map potential threats effectively, we use the STRIDE model, which stands for Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, and Elevation of Privilege.

Spoofing involves impersonation attempts, such as pretending to be an admin user or an internal service to trick the LLM into sharing restricted responses.

Tampering means altering inputs, outputs, or system data—like crafting malicious prompts to modify how the model behaves.

Repudiation refers to a lack of traceability, where actions taken through the LLM can’t be logged or attributed, raising compliance concerns.

Information Disclosure is a critical threat in LLMs—where the model may leak sensitive internal data through carefully crafted queries.

Denial of Service occurs when a user inputs recursive or resource-heavy prompts to overload the model or degrade system performance.

Elevation of Privilege means exploiting the model to perform actions outside its intended permissions—such as executing unauthorized API calls or bypassing ethical filters.

By applying STRIDE, we map out your LLM's threat landscape and simulate real-world attack scenarios accordingly.

Prioritizing Risks with the DREAD Model

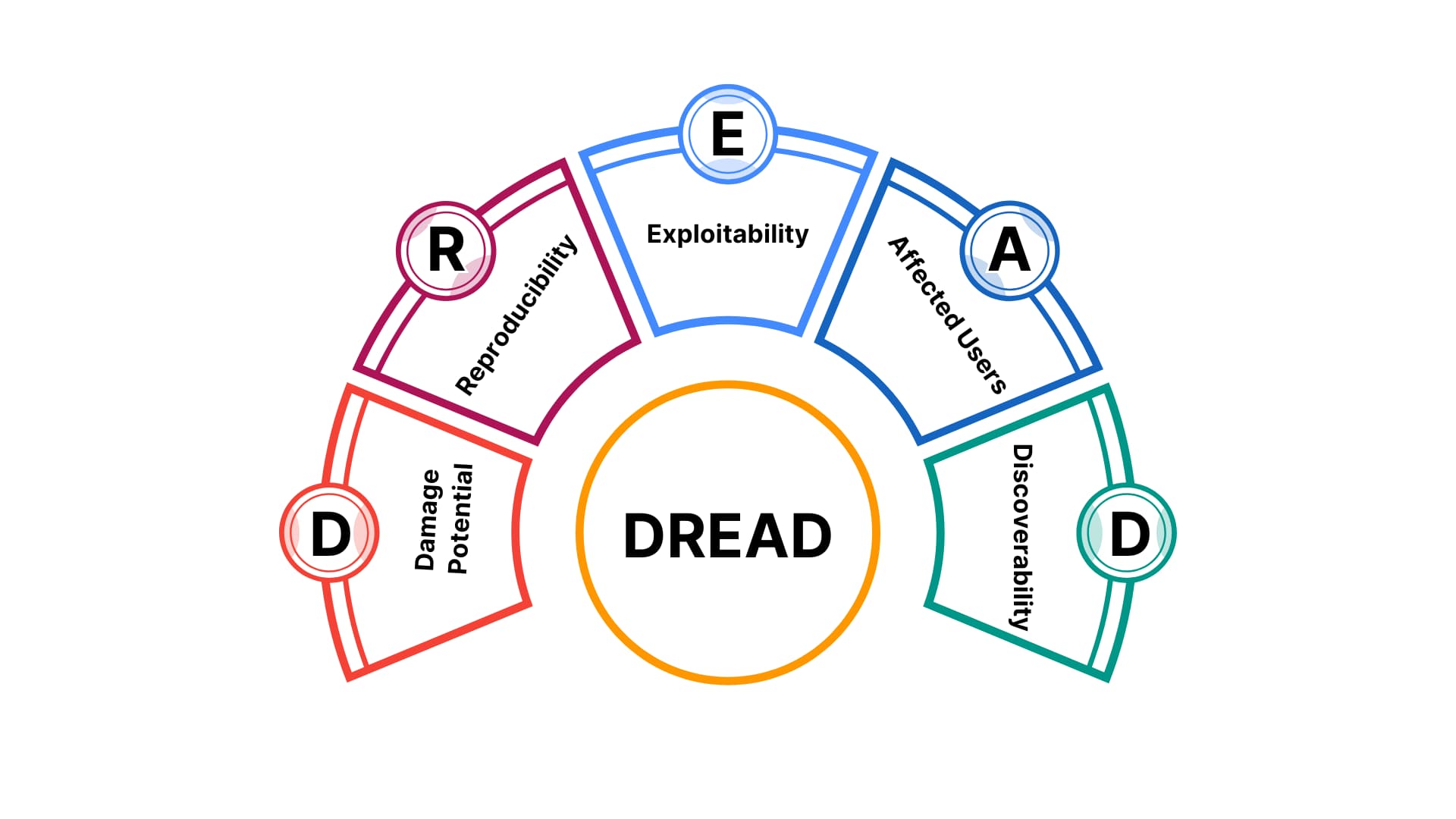

After identifying vulnerabilities, we prioritize them using the DREAD model, which evaluates each issue based on five key factors: Damage Potential, Reproducibility, Exploitability, Affected Users, and Discoverability.

Damage Potential assesses the possible impact if the vulnerability is exploited—for instance, leaking customer data or bypassing financial transaction limits.

Reproducibility examines how easy it is to recreate the attack consistently.

Exploitability looks at how simple it is for an attacker to launch the exploit, even without insider knowledge.

Affected Users considers the scale—whether the issue impacts a single admin or every end-user of the LLM.

Discoverability evaluates how likely it is for the flaw to be found by malicious actors in the wild.

DREAD helps us—and your team—focus on fixing the most dangerous vulnerabilities first, backed by logic and quantifiable risk scores

Real-World LLM Testing Techniques

- Prompt injection attacks Athat try to override system instructions.

- Jailbreaking attempts to remove ethical or policy filters.

- Data extraction techniques that trick the model into revealing training data.

- Role confusion attacks where a user manipulates the model into believing they have higher privileges.

- API abuse to access unauthorized data or perform restricted actions.

- Plugin and tool manipulation, particularly if the LLM has access to external services or databases.

We test both the core model and the surrounding environment—including API calls, databases, file systems, and third-party integrations.

Detailed Reporting and Expert Remediation

At the end of our VAPT process, DigiFortex delivers a comprehensive report that includes:

- A summary of discovered vulnerabilities

- Risk severity analysis based on STRIDE and DREAD

- Proof of concept for each issue

- Immediate and long-term remediation guidance

- Suggestions for model configuration, input/output filtering, and access control

We also offer post-assessment workshops with your developers and data teams to ensure that security recommendations are properly implemented without affecting your model’s performance or business use cases

Why Choose DigiFortex for LLM VAPT?

DigiFortex is among the first CERT-In empanelled cybersecurity firms in India offering specialized LLM VAPT services. Our credentials include ISO 27001:2022 certification and experience serving critical sectors like the Ministry of Defence, Ministry of Home Affairs, DRDO, Amazon, and IIMs. Our team includes CEH, CISSP, DCPLA, and AI security experts who understand both the model and the mission.

Whether you’re working with GPT, Claude, LLaMA, Falcon, or custom-trained models—DigiFortex provides the experience, tools, and trust you need to operate your AI systems securely and confidently.

Secure Your AI Before It’s Exploited

LLMs are powerful, but without security, they can become a gateway for data leaks, misinformation, or worse—regulatory violations.

Don't wait for an incident to occur. Get ahead with expert-led LLM VAPT from DigiFortex.

To know more: Click Here

DigiFortex is a Cyber Security company focused on enhancing Security, Governance, Risk, Compliance (GRC) and Privacy postures for enterprises. Our flagship offerings are GRC, Advanced Penetration Testing(VA/PT), Cloud Security (CNAPP), Next-Gen Security Operation Center(SOC), MSSP, v-CISO and products for advanced Security Assessments.

-

Get in Touch

© 2025 DigiFortex. All Rights Reserved.