The Four Giants of Data Privacy — and How They’re Bracing for the AI Storm

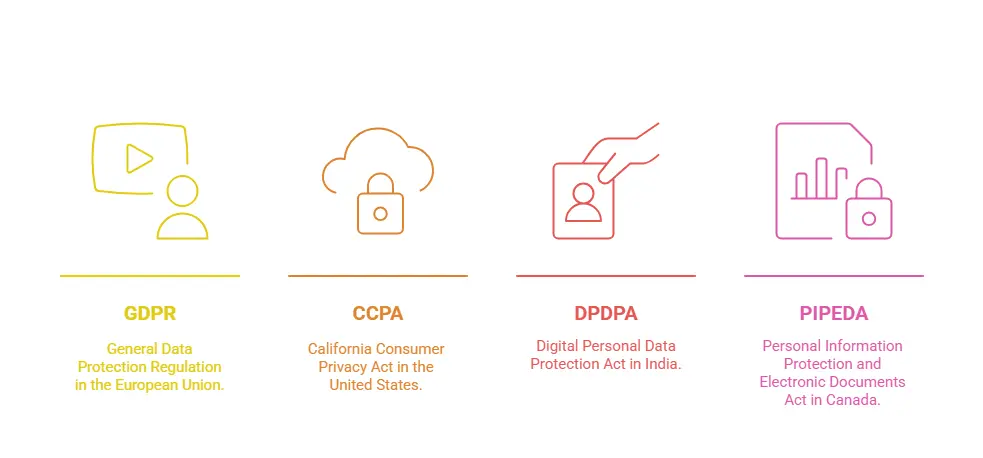

Let’s imagine the internet as a huge neighbourhood. In this neighbourhood, there are four experienced data privacy guards: GDPR, CCPA, DPDPA, and PIPEDA.

They don’t protect houses or cars. They protect something even more valuable: people’s personal data.

- GDPR – General Data Protection Regulation (European Union)

- CCPA – California Consumer Privacy Act (United States)

- DPDPA – Digital Personal Data Protection Act (India)

- PIPEDA – Personal Information Protection and Electronic Documents Act (Canada)

At first glance, these laws look similar: consent, transparency, rights to delete data. But beneath the surface, they differ in scope, enforcement muscle, and how they’re preparing for the AI-powered future.

Scope & Reach — Who They Protect and Where

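- GDPR: Protects people in the EU, regardless of where the company is based. If a US or Indian company offers goods or services to people in the EU, or monitors their behaviour there, GDPR applies. Even IP addresses and cookie identifiers can be “personal data” under GDPR.

- CCPA: Protects California residents and applies to for-profit businesses doing business in California that meet at least one threshold: annual gross revenue above $25M (adjusted for inflation); buying, selling, or sharing the personal information of 100,000 or more consumers or households a year; or deriving at least 50% of annual revenue from selling or sharing personal information. Focuses heavily on the sale and sharing of personal data.

- DPDPA: Protects individuals in India. Applies to processing of digital personal data within India, and to processing abroad when it is connected with offering goods or services to people in India, so foreign companies can be covered too. Its “Data Fiduciary” and “Significant Data Fiduciary” classifications determine obligations.

- PIPEDA: Protects individuals in Canada and applies to private-sector organizations that collect, use, or disclose personal information in the course of commercial activity. The Act also addresses the use of electronic documents.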
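The CCPA thresholds work as an “any one of” test. Here is a minimal Python sketch of that screening logic, assuming the statutory figures as amended by the CPRA; the $25M revenue figure is periodically adjusted for inflation, so it is kept as a constant you would update. The `Business` fields and the `ccpa_likely_applies` name are hypothetical, and the check ignores nuances such as what counts as doing business in California.

```python
from dataclasses import dataclass

# Statutory thresholds (CCPA as amended by CPRA). The revenue figure is
# inflation-adjusted over time, so treat it as configurable.
REVENUE_THRESHOLD_USD = 25_000_000
CONSUMER_THRESHOLD = 100_000
SALE_REVENUE_SHARE_THRESHOLD = 0.5


@dataclass
class Business:
    annual_gross_revenue_usd: float
    # Consumers or households whose personal information is bought, sold, or shared per year
    consumers_or_households_traded: int
    # Fraction of annual revenue derived from selling or sharing personal information
    revenue_share_from_selling_pi: float
    is_for_profit: bool = True
    does_business_in_california: bool = True


def ccpa_likely_applies(b: Business) -> bool:
    """Simplified screening sketch: meeting any one threshold is enough."""
    if not (b.is_for_profit and b.does_business_in_california):
        return False
    return (
        b.annual_gross_revenue_usd > REVENUE_THRESHOLD_USD              # "in excess of"
        or b.consumers_or_households_traded >= CONSUMER_THRESHOLD       # "or more"
        or b.revenue_share_from_selling_pi >= SALE_REVENUE_SHARE_THRESHOLD
    )


print(ccpa_likely_applies(Business(30_000_000, 0, 0.0)))       # True: revenue threshold
print(ccpa_likely_applies(Business(1_000_000, 50_000, 0.1)))   # False: no threshold met
```

Keeping the thresholds as named constants makes the inflation adjustments a one-line change.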

The Data It Covers — More Than Just Names and Emails

- GDPR: Covers any information related to an identifiable person — including biometric, genetic, location, and online identifiers.

- CCPA: Covers personal information and household-level data, such as purchase history, browsing activity, and inferences drawn about preferences.

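- DPDPA: Covers digital personal data, including data collected offline and later digitised. Unlike earlier drafts, the 2023 Act has no separate “sensitive personal data” category; instead, the volume and sensitivity of the data processed are factors in designating Significant Data Fiduciaries.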

- PIPEDA: Covers factual and subjective personal information — even opinions about a person.

AI’s New Privacy Dilemma

Artificial Intelligence can predict, profile, and personalize like never before — but it also brings new privacy challenges:

- Data Volume: AI models often train on huge datasets that might include personal data scraped from public sources.

- Inference Risk: Even if names are removed, AI can re-identify individuals by combining multiple data points.

- Opaque Decision-Making: AI systems can’t always explain why they made a decision, which clashes with legal transparency requirements such as the GDPR’s duty to give “meaningful information about the logic involved” in automated decisions.

- Continuous Learning: AI models keep evolving, making it tricky to freeze or delete a specific individual’s data.
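The inference risk described above is often measured with k-anonymity: group records by quasi-identifiers (attributes like ZIP code, birth year, and gender that are not names but can narrow down a person) and take the size of the smallest group as k. A k of 1 means at least one record is unique and can be singled out by anyone who knows those attributes. The sketch below uses a made-up dataset, and the function names are illustrative.

```python
from collections import Counter
from typing import Mapping, Sequence

Record = Mapping[str, str]


def _key(record: Record, quasi_identifiers: Sequence[str]) -> tuple:
    # The combination of quasi-identifier values for one record
    return tuple(record[q] for q in quasi_identifiers)


def k_anonymity(records: Sequence[Record], quasi_identifiers: Sequence[str]) -> int:
    """Size of the smallest group of records sharing the same quasi-identifier values."""
    if not records:
        raise ValueError("records must not be empty")
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return min(groups.values())


def singled_out(records: Sequence[Record], quasi_identifiers: Sequence[str]) -> list:
    """Records whose quasi-identifier combination is unique in the dataset."""
    groups = Counter(_key(r, quasi_identifiers) for r in records)
    return [r for r in records if groups[_key(r, quasi_identifiers)] == 1]


# Made-up "de-identified" data: names removed, other attributes kept
records = [
    {"zip": "10001", "birth_year": "1985", "gender": "F", "diagnosis": "asthma"},
    {"zip": "10001", "birth_year": "1985", "gender": "F", "diagnosis": "diabetes"},
    {"zip": "10001", "birth_year": "1990", "gender": "M", "diagnosis": "flu"},
    {"zip": "10002", "birth_year": "1985", "gender": "M", "diagnosis": "asthma"},
]

detailed = ["zip", "birth_year", "gender"]
print(k_anonymity(records, detailed))       # 1
print(len(singled_out(records, detailed)))  # 2
print(k_anonymity(records, ["gender"]))     # 2
```

Dropping or generalizing quasi-identifiers raises k, at the cost of less detailed data for training.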

How Each Law Deals with AI

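- GDPR:

  - Article 22 restricts decisions based solely on automated processing that produce legal or similarly significant effects on individuals.

  - Requires “meaningful information about the logic involved” in such decisions (Articles 13 to 15), often described as a right to explanation.

  - Requires Data Protection Impact Assessments (DPIAs, Article 35) before processing likely to result in high risk, which often includes AI-based profiling.

- CCPA:

  - The CPRA (California Privacy Rights Act), which amended the CCPA, directs regulators to create opt-out and access rights for automated decision-making technology, including profiling.

  - Requires privacy policies to disclose the categories of personal information collected and the purposes for using it, which covers AI-driven uses of personal data.

  - Regulations under the CPRA push toward algorithmic transparency: responses to access requests about automated decision-making are meant to include meaningful information about the logic involved.

- DPDPA:

  - Doesn’t yet have detailed AI clauses; future rules or amendments may address AI bias, accountability, and consent in model training.

  - Consent Managers, registered intermediaries through which individuals give, manage, and withdraw consent, may become checkpoints for auditing AI use.

- PIPEDA:

  - Guidance from the Office of the Privacy Commissioner of Canada encourages organizations to use AI responsibly and maintain human oversight.

  - The proposed Consumer Privacy Protection Act (CPPA), part of Bill C-27, would have strengthened AI accountability and transparency, but the bill died when Parliament was prorogued in January 2025.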
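In practice, human oversight of automated decisions often comes down to a routing rule in the decision pipeline. The sketch below is a hypothetical internal policy, not a statement of what Article 22 legally requires: decisions flagged as having a legal or similarly significant effect are held for human review unless a person has already reviewed them, and the main reasons are stored so they can be explained later. The `Decision` fields, `Route` values, and `route_decision` are illustrative names.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    PROCEED = "proceed"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    subject_id: str
    outcome: str                  # e.g. "loan_declined"
    significant_effect: bool      # legal or similarly significant effect on the person
    reviewed_by_human: bool = False
    reasons: list[str] = field(default_factory=list)  # main factors, kept for later explanation


def route_decision(decision: Decision) -> Route:
    """Hypothetical policy: hold solely automated, significant decisions for a human reviewer."""
    if decision.significant_effect and not decision.reviewed_by_human:
        return Route.HUMAN_REVIEW
    return Route.PROCEED


loan = Decision("u-1", "loan_declined", significant_effect=True,
                reasons=["debt_to_income above limit", "short credit history"])
ad = Decision("u-2", "show_ad_variant_b", significant_effect=False)

print(route_decision(loan))  # Route.HUMAN_REVIEW
print(route_decision(ad))    # Route.PROCEED
```

Which decisions count as “significant” is a policy and legal judgment; the code only enforces whatever flag is set upstream.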

Technical Compliance Challenges in the AI Era

- Data Minimization: AI loves big data; laws require using only what’s necessary.

- Anonymization vs. Pseudonymization: Truly anonymized data falls outside laws like the GDPR, while pseudonymized data is still personal data. AI models can sometimes “reverse engineer” identities from pseudonymized data, blurring the line.

- Model Auditing: Ensuring AI models aren’t biased or discriminatory and haven’t memorized personal data in their weights.

- Cross-Border Data Flow: AI training often happens across multiple regions, which can conflict with data residency rules and cross-border transfer restrictions.

- Real-Time Consent: Dynamic AI systems need to check and update permissions on the fly, not just once during signup.
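Several of these challenges meet in one place: preparing a record before it enters an AI training pipeline. Here is a minimal sketch, assuming a hypothetical per-purpose field allowlist (data minimization), a consent lookup performed at processing time rather than at signup (real-time consent), and keyed hashing with HMAC-SHA256 from Python’s standard library for pseudonymization. The output is pseudonymized, not anonymized: whoever holds the key can re-link tokens to users, so under the GDPR it remains personal data and the key should be stored separately.

```python
import hashlib
import hmac
from typing import Any, Dict, Mapping, Optional, Set

# Hypothetical allowlist: the only fields each purpose may use (data minimization)
ALLOWED_FIELDS: Dict[str, Set[str]] = {
    "churn_model": {"user_id", "plan", "tenure_months"},
}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with an HMAC-SHA256 token; same input and key give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_training(
    record: Mapping[str, Any],
    purpose: str,
    consents: Mapping[str, Set[str]],
    key: bytes,
) -> Optional[Dict[str, Any]]:
    """Return a minimized, pseudonymized copy, or None if consent for this purpose is missing."""
    # Consent is looked up at processing time, so a withdrawal takes effect on the next run
    if purpose not in consents.get(record["user_id"], set()):
        return None
    allowed = ALLOWED_FIELDS[purpose]
    minimized = {k: v for k, v in record.items() if k in allowed}
    # Keep a stable join key without exposing the raw identifier
    minimized["user_id"] = pseudonymize(record["user_id"], key)
    return minimized


key = b"example-key-load-from-a-secrets-manager"  # placeholder; never hard-code real keys
consents = {"u-123": {"churn_model"}}
record = {"user_id": "u-123", "email": "a@example.com", "plan": "pro", "tenure_months": 14}

print(prepare_for_training(record, "churn_model", consents, key))  # email dropped, user_id tokenized
consents["u-123"].discard("churn_model")                           # user withdraws consent
print(prepare_for_training(record, "churn_model", consents, key))  # None
```

A keyed hash is used instead of a plain SHA-256 because common identifiers can be guessed and hashed by anyone; without the key, the tokens cannot be recomputed that way.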

Penalties if You Get It Wrong

- GDPR: Up to €20 million or 4% of annual global turnover — whichever is higher.

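- CCPA: Up to $2,500 per violation, or $7,500 per intentional violation or violation involving the personal information of consumers under 16 (amounts are adjusted for inflation).

- DPDPA: Up to ₹250 crore for failing to take reasonable security safeguards to prevent a personal data breach.

- PIPEDA: Fines of up to CAD $100,000 for specific offences, such as knowingly failing to report a breach of security safeguards or obstructing an investigation (reform proposals have sought far higher penalties).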
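The GDPR cap is a simple “whichever is higher” rule, and CCPA penalties scale with the number of violations. Here is a sketch of that arithmetic, assuming the base statutory CCPA amounts ($2,500, or $7,500 for intentional violations or those involving minors) before inflation adjustment; how violations are counted in a real case is a legal question the code does not answer. Function names are illustrative.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: float) -> float:
    """Upper GDPR tier (Art. 83(5)): EUR 20M or 4% of worldwide annual turnover
    of the preceding financial year, whichever is higher."""
    return max(20_000_000.0, 0.04 * worldwide_annual_turnover_eur)


def ccpa_max_penalty_usd(violations: int, intentional_or_minor: bool) -> int:
    """Base CCPA amounts before inflation adjustment:
    $2,500 per violation, $7,500 if intentional or involving minors."""
    per_violation = 7_500 if intentional_or_minor else 2_500
    return violations * per_violation


print(gdpr_max_fine_eur(100_000_000))    # 20 million: 4% would be only 4 million
print(gdpr_max_fine_eur(2_000_000_000))  # 80 million: 4% exceeds EUR 20M
print(ccpa_max_penalty_usd(1_000, intentional_or_minor=True))  # 7500000
```

For large companies the percentage term dominates, which is why GDPR exposure grows with global revenue rather than staying at a fixed ceiling.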

The Future — Stronger, Smarter, AI-Aware Laws

In the coming years, data privacy regulations are expected to evolve into stronger, AI-focused frameworks. We’re likely to see AI-specific rules built on GDPR-style principles adopted across multiple countries, with stricter oversight of automated decision-making and algorithmic transparency. International cooperation may lead to treaties on cross-border AI data transfers, making global AI operations more legally consistent. Businesses will need to run AI risk assessments as routinely as cybersecurity audits, evaluating issues like bias, explainability, and data security. Transparency dashboards for AI, which let individuals see how their data is used, processed, and learned from, are also likely to become mandatory, making AI systems more accountable and trustworthy.


DigiFortex is a Cyber Security company focused on enhancing Security, Governance, Risk, Compliance (GRC) and Privacy postures for enterprises. Our flagship offerings are GRC, Advanced Penetration Testing (VA/PT), Cloud Security (CNAPP), Next-Gen Security Operation Center (SOC), MSSP, v-CISO and products for advanced Security Assessments.


© 2025 DigiFortex. All Rights Reserved.